How To Understand Confidence Level for Better Machine Learning

July 22, 2021 | 6-minute read

As machine learning software automates more and more processes, users need a metric for gauging how accurate and effective that automation is. In video editing, redaction, and transcription software, that metric is the confidence level the software reports for each automatic function it performs.

For example, an automatic transcription program reports a confidence level for the accuracy of each word it transcribes from a video or audio file. With these confidence levels, users can find and correct mistakes made during the automated process. In this way, they can maintain the quality of their work and avoid unnecessary errors.

What is a confidence level in machine learning?

A machine learning confidence level is the software's own estimate of how likely an automatic result is to be correct. It is typically expressed as a percentage from 0% to 100%: the higher the percentage, the more certain the software is that it performed its function correctly for a given result, such as a transcribed word or a detected object. How confidence levels are presented varies with the task, as the following examples show.

In automatic transcription, the confidence level represents how certain the software is that it transcribed each word of an audio file correctly. After transcribing a file, the software underlines any word with a low confidence level, whether the uncertainty comes from the speaker's accent, the volume of their voice, or something else, and reports that word's confidence as a percentage. The underlines are also color-coded by confidence range (20%-40%, 40%-60%, 60%-80%, and so on), so users can go back and correct mistakes with as little time and effort as possible.
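To make the idea of confidence ranges concrete, here is a minimal Python sketch that picks out low-confidence words and groups them into 20-point ranges like the ones above. The `Word` class, the `confidence_range` and `words_to_underline` helpers, and the 80% underline threshold are hypothetical illustrations, not the transcription software's actual API, and the sample words and scores are invented.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Word:
    text: str
    confidence: float  # percentage, 0 to 100


def confidence_range(confidence: float, width: int = 20) -> tuple[int, int]:
    """Return the (low, high) percentage range that a confidence value falls into."""
    if not 0 <= confidence <= 100:
        raise ValueError("confidence must be between 0 and 100")
    # Fold 100% into the top range instead of creating a 100-120 range.
    low = min(int(confidence // width) * width, 100 - width)
    return low, low + width


def words_to_underline(
    words: list[Word], threshold: float = 80.0
) -> list[tuple[str, tuple[int, int]]]:
    """List words below the (hypothetical) threshold with the range that sets their color."""
    return [(w.text, confidence_range(w.confidence)) for w in words if w.confidence < threshold]


# Invented example transcript: only "plate" and "street" fall below 80%.
transcript = [Word("hello", 92.0), Word("plate", 35.0), Word("street", 67.0)]
print(words_to_underline(transcript))
```

Each underlined word keeps its range, so a viewer could map every range to its own underline color.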

In video redaction and object detection, confidence levels represent how certain the software is about each object it detects in a video recording. Because recordings can contain many moving objects, seen from different angles and entering or leaving the frame at different times, an automatic video redaction program will inevitably misidentify some objects, whether because of low video resolution, objects that are far from the camera, darkness, weather, or many other factors.

Once automatic redaction is complete, every detected object is displayed to the user along with its confidence level. As with transcription, users can rely on these confidence levels to review the detections and confirm that the software identified each object as accurately as possible.

How are confidence levels used in the context of object detection?

When the software automatically detects faces, people, cars, license plates, or any other type of object in a video file, it reports a confidence level for each detection so users can judge how accurate that detection is. In the example below, the object detection feature was used to scan a video for license plates. Each detection receives a confidence level from 0% to 100%, and the confidence threshold can be set before starting the redaction process. For example, for a lower-quality video, the threshold could be set to 80% rather than 100% so that more license plates are detected.
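The effect of the threshold setting can be sketched in a few lines of Python. This is a minimal illustration, assuming the threshold acts as a minimum confidence a detection must reach to be kept; the `Detection` class, the `filter_detections` helper, and the sample confidence values are hypothetical, not the redaction software's real API or data.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float  # percentage, 0 to 100


def filter_detections(detections: list[Detection], min_confidence: float) -> list[Detection]:
    """Keep detections whose confidence is at or above the minimum threshold."""
    return [d for d in detections if d.confidence >= min_confidence]


# Hypothetical license plate detections from a lower-quality video.
detections = [
    Detection("license plate", 93.0),
    Detection("license plate", 84.0),
    Detection("license plate", 71.0),
]

for threshold in (100.0, 90.0, 80.0):
    kept = filter_detections(detections, threshold)
    print(f"threshold {threshold:.0f}%: {len(kept)} plate(s) kept")
```

Lowering the threshold keeps more detections, but it also lets through more false positives, which is why reviewing low-confidence detections afterwards still matters.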

The detection panel also shows a thumbnail of each detection and lets users jump to the point in the video where that detection was made. This way, users can verify that the software picked up the objects that need to be redacted.

Users can also delete or disable any detection they do not want in the final output. In the example below, the software reports a confidence level of 81% for a detection. Because the thumbnail confirms that the detected object is a license plate, users can be confident that the redaction is accurate.

Conversely, the software reports lower confidence levels for objects that may have been detected poorly. In the example below, the software has mistakenly detected a car mirror as a license plate. The error is signaled both by a low confidence level of 24% and by a thumbnail showing exactly what was detected during redaction. If users want to remove a detection that picked up the wrong object, they can use the disable and lock buttons, represented by the eye and lock symbols respectively.
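A review step like this one can be sketched as a queue that surfaces the least confident detections first. The sketch below is a hypothetical illustration: the `Detection` class, the `review_queue` and `disable` helpers, and the 50% review cutoff are assumptions, not the product's API. Only the 81% license plate and the 24% misdetected car mirror come from the examples above.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Detection:
    label: str            # what the detector thinks the object is
    confidence: float     # percentage, 0 to 100
    enabled: bool = True  # hypothetical flag: disabled detections are not redacted


def review_queue(detections: list[Detection], review_below: float = 50.0) -> list[Detection]:
    """Return enabled detections under the (hypothetical) review cutoff, least confident first."""
    flagged = [d for d in detections if d.enabled and d.confidence < review_below]
    return sorted(flagged, key=lambda d: d.confidence)


def disable(detection: Detection) -> None:
    """Exclude a detection from redaction, similar in spirit to the eye button."""
    detection.enabled = False


# Both detections are labeled as plates; the 24% one is really a car mirror.
detections = [Detection("license plate", 81.0), Detection("license plate", 24.0)]

for d in review_queue(detections):
    # A reviewer checks the thumbnail, sees a mirror, and disables the detection.
    disable(d)

print([(d.confidence, d.enabled) for d in detections])
# [(81.0, True), (24.0, False)]
```

Sorting by confidence means the reviewer's attention goes to the detections most likely to be wrong.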

How are confidence levels used in the context of transcription?

As with automatic video redaction, automatic transcription reports a confidence level for its results. Once transcription is complete, words with a low confidence level are underlined.

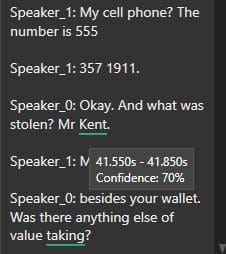

The example below shows a transcribed sample 911 call between two speakers, with underlines on the words the software may have transcribed incorrectly. Users can hover over an underlined word to see its confidence level as a percentage from 0% to 100%, along with the timestamp in the audio recording where that word occurs. In the example below, the words “any time” have a confidence level of 48% and are marked with a red underline.

The next example shows a slightly higher confidence level of 59% for the word “is”. As in the previous example, the software has underlined this word because of its confidence level. However, because 59% is higher than 48%, the underline is a different color, so users can quickly scan a transcript and see how reliable the transcription is. Words with confidence levels somewhat higher than 48% or 59% are marked with a green underline, as with the word “usually” in the example above.
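The color coding and hover text described above can be sketched as two small functions. The article gives only examples (48% red, 59% a different color, somewhat higher scores green), so the cutoffs below, the choice of "orange" for the middle color, and the sample timestamp are all hypothetical assumptions rather than the software's actual settings.

```python
from __future__ import annotations

# Hypothetical cutoffs: each (upper bound, color) pair applies to
# confidences below that bound. Words at 90% or above are not underlined.
COLOR_CUTOFFS = [(50.0, "red"), (70.0, "orange"), (90.0, "green")]


def underline_color(confidence: float) -> str | None:
    """Return the underline color for a word, or None if it needs no underline."""
    for upper, color in COLOR_CUTOFFS:
        if confidence < upper:
            return color
    return None


def hover_text(word: str, confidence: float, start_seconds: float) -> str:
    """Text shown when hovering over an underlined word: confidence plus timestamp."""
    minutes, seconds = divmod(int(start_seconds), 60)
    return f"{word}: {confidence:.0f}% at {minutes:02d}:{seconds:02d}"


print(underline_color(48), underline_color(59))
print(hover_text("any time", 48, 12.4))  # 12.4 s is an invented timestamp
```

Keeping the cutoffs in a single list makes it easy to adjust the bands without touching the lookup logic.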

Because complex software can be confusing and daunting to use, machine learning confidence levels give users both a visual and an analytical way to review automated results and maintain a high standard of work in their respective fields. When a word has a low confidence level and is underlined, users can listen to the corresponding part of the recording to check whether the software made a mistake.

Likewise, users can watch a video after it has been automatically redacted to confirm that all objects were detected correctly throughout the recording. Whether you are automatically redacting audio or video files, confidence levels make the review process much easier.